Guides to Quality in Visual Resource Imaging

July 2000

In 1998, the Digital Library Federation, the Council on Library and Information Resources, and the Research Libraries Group created an editorial board of experts to review the state of the art in digital imaging of visual resources (original photographs, prints, drawings, maps, etc.). While sources for instruction in digitizing text or text and images existed and were growing, none specifically addressed the challenges of two- and three-dimensional, as well as color-intensive, materials. Charged to identify imaging technologies and practices for such visual resources that could be documented and recommended, the board arrived at a set of guides in the science of imaging: objective measures for image qualities and how they can be controlled in various aspects of the imaging process. DLF and CLIR commissioned board-recommended authors to write the guides from detailed outlines created by board members, and have published the guides on the Web with RLG. These five guides are designed to serve the growing community of museums, archives, and research libraries turning to imaging as a way to provide greater access to their visual resources while simultaneously preserving the original materials. They will be updated periodically, and DLF and RLG welcome your comments.

Museums, archives, and libraries worldwide are converting visual resources into digital data, and in each case the managers of those conversion programs face the same series of decisions about how to create the best possible image quality. These guides bring together the expertise and experience of leaders in the field of visual and color imaging and make their knowledge widely accessible. The guides are written for those who have already decided what they will digitize and what purposes the digital images will serve. After the often-complex matters of selection have been settled, these guides address the steps to successfully create and store high-quality digital masters and derivatives.
They include project planning, scanner selection, imaging system set-up, and the resulting digital masters. Guide 1, planning, underscores the importance of defining the users' needs and requirements before undertaking the project. Best practices in digital visual resources include creating images that meet quality and use objectives and documenting how the image being delivered was created. Guide 2, finding the right scanner, starts from a knowledge of the source material to be scanned and, by looking at how to interpret product specifications and employ verification tests, equips users to evaluate new machines as well as those now on the market. Similarly, for setting up the larger system of scanner, camera, operating system, and image-processing software, guide 3 provides information, techniques, and procedures that can be used now and into the future. Guide 4 deals with digital masters, focusing on developing 'visual literacy' in digital imagery and quality assurance; and guide 5 addresses the effect that different file formats (the containers) have on the performance and persistence of digital masters over time and through technological change.

While there are few universally applicable answers to the questions faced by those who plan and carry out visual imaging projects, the writers of these guides identify critical decision-making points and offer concrete guidance based on the purposes of the images. Where possible, they provide objective measurements of image quality. At the same time, they flag areas where further research and testing are needed before specific practices can be recommended. Each guide is a module that can stand on its own to be mined for information. As a set, the guides show how to find what you need to accomplish your stated goals with the available technology, whatever its state of evolution. They also help to clarify the consequences of the trade-offs that all managers must make to stay within their means.
In providing this framework, from planning to digital output and perpetuity, this publication is intended to offer value both to practitioners and to those who must judge whether a digital imaging effort is feasible, well planned, and worth supporting.

| Staffing | Description | In-house project | Outsourced project |

| Project managers (internal) | Internal project managers are required to manage project goals and institutional expectations, identify staffing and equipment costs, coordinate archival and access needs across departments, and adapt the digital plan as necessary to achieve success. | x | x |

| Vendor project managers | Vendor project managers run the digital operation and allocate appropriate staffing and expertise to the project. | x | |

| Photo services staff | Institutions with an internal photo services division should use it to manage, operate, and maintain the digital project. If the project is outsourced, photo services staff must closely interact with the vendor. | x | x |

| Curatorial and archives staff | Internal curatorial or archives staff members, or both, identify project goals, choose objects for digitizing, identify preservation concerns, and project the short- and long-term uses for the digital images. | x | x |

| Conservators and preservationists | In-house conservators or preservationists should review the project before it begins and identify conservation and preservation concerns. | x | x |

| External consultants | External consultants may advise on digital studio setup, system integration and networking concerns, archival storage issues, color-management needs, and other matters. | x | x |

| Grant writers | Grant writers may be needed to write proposals to secure funding for the project. | x | x |

| Computer/technology staff | Computer and technology staff set up the system, resolve network issues, design storage systems, and performs similar tasks.An institution that does not have these resources must secure outside resources to set up the computers and networks and to handle maintenance. | x | x |

| Preparators and art handlers | Preparators and art handlers prepare and transport objects to the studio for digitizing. An institution dealing with surrogates may not require this type of staff. | x | x |

| Quality and production managers | These supervisors set and maintain image quality-control standards and production goals. Their functions should be separate from those of the scanner or camera operators and technicians. | x | |

| Scanner or camera operators and technicians | Scanner or camera operators and technicians capture and edit the original object or surrogate. | x | |

| Post-processors | Once a digital image has been captured, it is passed to a post-processor, who processes it on archival storage mediums and prepares it for short- and long-term use. | x | |

| Administrative assistants | Assistants create and maintain archival logs and keep track of the metadata information to ensure that the digital process is documented and that the documentation can be searched for easy retrieval. | x | x |

| Vendor services for digital capture, post-processing, and administering logs | Significant vendor costs will be incurred for digital capture, post-processing, administering logs, and equipment use. Often this cost is subsumed in the per-image cost. | x | |

| Equipment | |||

| Digital evaluation costs | Before the project begins, one has the option of renting digital equipment (e.g., digital camera backs, flatbed scanners, and lighting) for testing and evaluation or setting up tests at the outside vendor location. Note: Some institutions may decide not to test as this can be expensive and time-consuming. | x | x |

| Digital capture equipment | Equipment must be purchased to digitally capture the original object or surrogate. | x | |

| Copystand or cradle or flatbed scanner | If using a digital camera back (attached to a traditional camera), one needs a customized copystand or cradle to hold the object being captured. A flatbed scanner must be set up properly or customized for the project. | x | |

| Computer equipment and server setups | Image processing computers capable of high-volume data throughput are the backbone to the digital capture, post-processing, and editing stations. They are also needed for the administrative stations, and to review and check image quality, keep metadata logs, and retrieve images from the archive. In addition, servers are needed for networking the computers within and outside the studio. The number of computers required depends on whether this done in-house or is outsourced. | x | x |

| Lighting | The appropriate lighting for digital capture (e.g., halogen, HMI, strobe) must be chosen. | x | |

| Voltage regulators | Voltage regulators are required to control fluctuations in scanning caused by lighting or electrical jumps. | x | |

| Lightbox | A lightbox is used to view and compare originals or surrogates with the digital images. | x | x |

| Conservation items for the studio | Conservation items include air filters, air conditioners, white gloves, and lab coats. | x | |

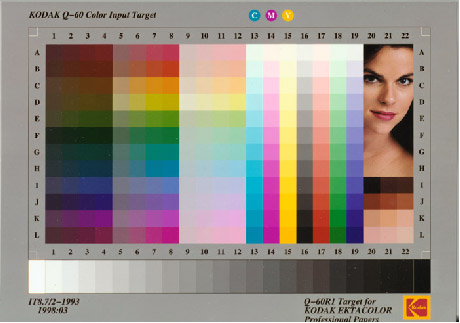

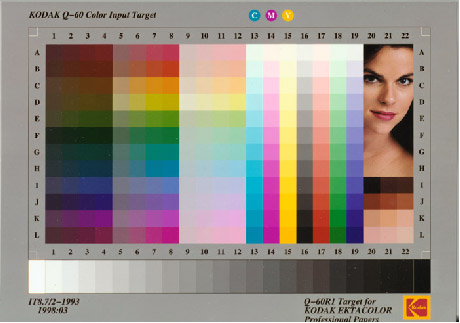

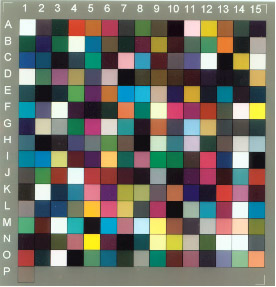

| Color management controls | Tools for controlling color management in the studio include targets, gray-scale and color bars, densitometers, calibration equipment, and profiles. | x | x |

| Post-processing equipment | Post-processing equipment includes tape drives and CD burners. | x | |

| Archival storage mediums | Equipment used to archive the digital images includes CDs, tapes, and near-line network storage. | x | x |

| Transfer mediums | Equipment such as ZIP, JAZ, and intranet or Internet connections is needed to transfer images outside the studio. | x | x |

| General supplies for digital capture | General supplies include extra light bulbs, storage disks, and office materials. | x |

Regardless of whether the work is done in-house or is outsourced, a significant number of in-house staff members are required to manage the project. In addition, over time, the cost of vendor project management and outsourced digital capture and post-processing services can equal or exceed costs of an in-house project.

Many of the costs noted in Table 1 will be incurred at the beginning of the project. Once the project is under way, most of the expenses are for maintenance and support to sustain the operation. Digitizing is labor-intensive, and a project involving a large quantity of materials (thousands or millions of objects) can take years to complete. Ongoing maintenance may include the following:

- paying staff or vendors to capture and archive the images;

- upgrading digital and computer equipment every year or two;

- paying unanticipated expenses as the project matures, such as expanded outside vendor services, consultants, and additional staff or equipment; and

- weekly technical maintenance on imaging computer equipment, system integration, networking, and archival storage system management.

2.5.2 Managing Cost Implications

Because funds are finite, it is useful to ask whether compromises can be made in any area. The answer depends on the details associated with the project's scope, its intent, and the nature of the source originals. It is important to be informed about the consequences of cutting costs in various ways and to be aware of the costs associated with every aspect of the imaging process.

Although the expenses for digital projects may seem overwhelming, cutting corners has serious implications. A decision to reduce spending in one area can adversely affect other aspects of the project. For example, cutting an extra staff person or an extra computer will, other things being equal, slow production, and the slower pace will cost money in the end. Although cutting costs may appear to be a straightforward matter of cost-benefit analysis, the long-term revenue 'lost' can be difficult to calculate. Managers should therefore prepare several projected scenarios of long-term revenue so that they can weigh the consequences of a cut under alternative assumptions.
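The point that a cut can slow production and cost more in the end can be made concrete with a simple scenario comparison. The sketch below assumes that total cost is an up-front equipment cost plus a fixed weekly cost for staff and overhead, and that cutting a capture station lowers both the weekly cost and the production rate. Every figure is a hypothetical placeholder, not data from this guide.

```python
import math
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    images_per_week: int   # sustained production rate
    weekly_cost: float     # staff, maintenance, and overhead per week
    upfront_cost: float    # equipment bought at the start


def project_cost(s: Scenario, total_images: int) -> tuple[int, float]:
    """Return (weeks to finish, total cost) for digitizing total_images."""
    weeks = math.ceil(total_images / s.images_per_week)
    return weeks, s.upfront_cost + weeks * s.weekly_cost


# All figures below are hypothetical placeholders.
full = Scenario("two capture stations", 500, 4_000.0, 60_000.0)
reduced = Scenario("one station cut", 300, 3_000.0, 45_000.0)

for s in (full, reduced):
    weeks, cost = project_cost(s, 50_000)
    print(f"{s.name}: {weeks} weeks, ${cost:,.0f}")
```

With these made-up inputs, the reduced setup needs 167 weeks instead of 100 and costs $546,000 rather than $460,000. Different inputs can reverse the result, which is why running several scenarios is more informative than running one.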

If one cannot afford all the costs up front, it will be necessary to determine how many images can be digitized per year without sacrificing quality and then to designate a longer period to complete the project. It is better to set modest goals than to create unrealistic expectations. Unforeseen expenses are inevitable and may occur as early as the first three months of production. They must be borne in mind when projecting how long it will take to digitize the source material and the costs associated with that length of time. If projections are made during testing and evaluation, the project manager will usually have a general idea of how best to budget for the project. Spreading the cost over several years can also fit well into a funding proposal.
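Working out how many images can be digitized per year, and therefore how long the project will take, is simple arithmetic once a per-image cost has been estimated during testing. This minimal sketch assumes a flat per-image cost and reserves a fixed share of each year's budget for unforeseen expenses; the 15 percent reserve and all other inputs are hypothetical.

```python
import math


def images_per_year(annual_budget: float, cost_per_image: float,
                    contingency: float = 0.15) -> int:
    """Images affordable per year after reserving a share of the budget
    for unforeseen expenses (the 15% default is a hypothetical figure)."""
    usable = annual_budget * (1.0 - contingency)
    return math.floor(usable / cost_per_image)


def years_to_complete(total_images: int, per_year: int) -> int:
    """Whole years needed to digitize total_images at per_year."""
    return math.ceil(total_images / per_year)


# Hypothetical inputs: $100,000 a year, $7.00 per image, 50,000 works.
per_year = images_per_year(100_000, 7.00)
print(per_year, "images/year;", years_to_complete(50_000, per_year), "years")
```

With these inputs the budget covers 12,142 images a year, so a 50,000-work collection takes five years.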

2.5.2.1 Quality versus quantity

A common issue in managing the finances of a digital project is how to achieve the proper balance between quality and rate of production. Cutting corners on quality creates the risk of having to re-digitize some images, and this risk should be minimized. It is always preferable to reach a well-defined and objective measure of affordable quality, defined not only in terms of image resolution but also in terms of other metrics. The whole image-processing chain has to be examined. Besides issues concerning the system for digital capture, one should review compression, file formats, image processing for various uses, and system calibration (Frey and Reilly 1999).

Objective measures for defining a level of quality are useful for documenting how the source material was captured, in terms of tone, detail, noise, and color reproduction (Frey and Reilly 1999). Other standards, such as using a consistent file size and format and recording the digital camera settings, can also be valuable. Formats such as Tagged Image File Format (TIFF) and Encapsulated PostScript (EPS) can carry information about the scanning device within the image file itself. Given the range of standards that have emerged, the learning curve for selecting appropriate standards can be steep, and seeking the help of a consultant can be expensive. Nevertheless, an organization that has used standards will have greater assurance that the source material being digitized today can be migrated properly and used in the future. The standards must be documented so that someone who wants to use the digital images years from now will have measurements to return to. Organizations using an outside service should make sure the vendor provides this documentation with the digital images.
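One way to keep capture documentation with the images is a small per-image record saved alongside each file. The sketch below is hypothetical: the field names, the JSON sidecar approach, and the example values are illustrative choices, not a metadata standard endorsed by this guide. An institution would normally map such fields to whatever standard it adopts.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class CaptureRecord:
    """Hypothetical per-image capture log entry; field names are illustrative."""
    image_id: str
    source_format: str       # e.g. original print, slide, glass-plate negative
    capture_device: str      # camera back or scanner make and model
    pixel_width: int
    pixel_height: int
    bit_depth: int           # bits per pixel, e.g. 24 for RGB
    file_format: str         # e.g. "TIFF"
    targets: list[str] = field(default_factory=list)  # gray scale, color bars
    operator: str = ""

    def file_size_bytes(self) -> int:
        """Uncompressed image-data size implied by the recorded dimensions."""
        return self.pixel_width * self.pixel_height * self.bit_depth // 8


record = CaptureRecord(
    image_id="coll-000123",                     # hypothetical identifier
    source_format='8" x 10" silver gelatin print',
    capture_device="(camera back make/model)",  # placeholder
    pixel_width=4000, pixel_height=5000, bit_depth=24,
    file_format="TIFF",
    targets=["gray scale", "color bars"],
)
sidecar = json.dumps(asdict(record), indent=2)  # text saved next to the image
print(sidecar)
print(record.file_size_bytes())  # 60000000 bytes, about 60 MB
```

Storing the record as plain text keeps it readable without the capture software, which matters when the images are migrated years later.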

Quantity, or achieving a desired rate of production, is also important to a successful project. An efficient workflow can raise quantity without sacrificing quality because it minimizes disruptions in the flow of digital capture, editing, and processing. It also leads to better handling of objects and greater satisfaction among scanning operators. Understanding efficient workflows will help in evaluating both in-house processes and outside services. Achieving an efficient workflow is discussed in more detail in Section 4.3.

2.5.2.2 File size

It can be quicker to capture low-resolution files, and they are appropriate if the use is limited to a quick identification shot. However, if the goal is to create an image that can be archived for many uses, there are other factors to consider. Does capturing a midsized file (e.g., 18 MB), as opposed to a high-resolution file (e.g., 70-100 MB), save time and money? Perhaps surprisingly, such a difference in capture size does not significantly change the number of images one can capture per day or per week. This is because most of the time is spent setting up the shot, editing the work, processing images on storage mediums, backing up the files, and changing the camera. This is the case for in-house as well as outsourced services. Therefore, it is often advantageous to digitize at the larger file size, provided one's goals for size are not excessive (with available technology, anything larger than 100 MB per image may affect production goals).
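The claim that file size barely affects daily throughput follows from a simple time model in which most per-image time is fixed overhead. This sketch assumes 15 minutes of size-independent work per image (setup, editing, writing to storage, backup), 1 second per MB of size-dependent capture and transfer time, and an 8-hour day; all three values are hypothetical.

```python
def images_per_day(file_size_mb: float, overhead_min: float = 15.0,
                   sec_per_mb: float = 1.0, workday_min: float = 480.0) -> int:
    """Whole images captured per workday under a fixed-plus-variable model.

    overhead_min: per-image time independent of file size (hypothetical).
    sec_per_mb: size-dependent capture and transfer time (hypothetical).
    """
    minutes_per_image = overhead_min + file_size_mb * sec_per_mb / 60.0
    return int(workday_min // minutes_per_image)


for size in (18, 85):
    print(size, "MB:", images_per_day(size), "images/day")
```

Under these assumptions an 85-MB master costs only two images a day compared with an 18-MB file (29 versus 31). If the size-dependent time were large relative to the overhead, the gap would widen, so the model is worth re-running with times measured during testing.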

Regardless of what size is to be captured, an analysis of server systems and storage-management schemes for backup and archiving is necessary to determine the potential costs of storing and migrating these files. It takes longer to open and edit a large image file than a small one, and longer to view multiple images simultaneously when files are large. Therefore, larger files carry costs for computer upgrades: more RAM and VRAM, additional hard drive space, and greater processing power. (Larger file sizes cause similar problems in a networked environment.) Additional computers are often needed so that capturing and editing large files can be handled on separate machines. Because additional computers, upgrades, or network capacity can become necessary sooner than expected (often within the first three months), it is wise to budget funds for such expenses.
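A first-pass storage estimate multiplies the number of images by the master file size and by the number of copies kept. The sketch assumes three stored copies of each master and access derivatives totaling 10 percent of one master; both defaults are hypothetical, and migration overhead is ignored.

```python
def storage_gb(num_images: int, master_mb: float, copies: int = 3,
               derivative_ratio: float = 0.1) -> float:
    """Total storage in gigabytes (1 GB = 1,000 MB) for a collection.

    copies: stored copies of each master (for example a working copy plus
    on-site and off-site backups); the default of 3 is hypothetical.
    derivative_ratio: combined size of access derivatives relative to one
    master; the default of 0.1 is hypothetical.
    """
    per_image_mb = master_mb * (copies + derivative_ratio)
    return num_images * per_image_mb / 1_000


for size_mb in (18, 85):
    print(f"{size_mb} MB masters: {storage_gb(50_000, size_mb):,.0f} GB")
```

For 50,000 images this gives roughly 2,790 GB with 18-MB masters and 13,175 GB with 85-MB masters, a difference that drives both media costs and the time needed for each future migration.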

2.5.2.3 Computer costs

Unplanned computer costs often relate to managing the image workflow in the studio and are based on problems that arise during the production stage. The following scenarios describe unexpected computer problems that would incur additional costs:

- Scenario 1: The scanning and editing computers are incompatible and cannot pass images properly from one system to another. Additional costs are incurred for technical help and possible restructuring, such as adding or subtracting computers in the workflow.

- Scenario 2: The volume of data being produced causes the computers' hard drives to become fragmented every week. Costs such as those in scenario 1 may be incurred.

- Scenario 3: One or more computers crash for an undiagnosed reason, stopping scanning for several hours. The technician recommends buying a new computer and isolating it from the production loop.

3.0 Analyzing Characteristics and Conditions of the Source Images

As discussed in Section 2, a complete needs analysis addresses the scope and goals of the digital project. But the best-laid plans can go awry if the characteristics and conditions of the original or source surrogate are such that they prevent digital capture. An assessment of the source images includes an estimate of the number of objects to capture, examination of their formats and characteristics, identification of the critical features that need to be retained (e.g., detail and pictorial content), and an evaluation of their condition and disposition (to decide, for example, whether to retain or dispose of the 'original' source material). The results of this analysis will affect the decisions about handling the originals during digital capture, the methodology to choose (e.g., whether to capture from the original or from the film intermediate), and the appropriate digital equipment for the image quality required. [6]

3.1 Estimating the Number of Images to be Captured

The total number of objects to be digitized is actually less important than the number of works in each source format. In a hypothetical collection of 50,000 works, 5 percent may have existing film intermediaries (i.e., slides, transparencies, or copy prints), while the rest are original materials. Of the original materials, most are 8" x 10" silver gelatin prints, and the rest are oversized (30" x 40") color photographs or daguerreotypes. These numbers suggest that scanning existing film intermediaries would complete only a small percentage of the project. The project manager needs to decide whether to create new film intermediaries for the remaining 95 percent of the works and then scan them, or to capture directly from the original materials. Direct capture would require equipment capable of handling all of these sizes and formats, which constrains the selection of equipment.
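Tallying the collection by source format, rather than as a single total, shows how much work falls outside the existing film intermediaries. The 5 percent figure comes from the example above; the split among print sizes and daguerreotypes is hypothetical, since the text says only that "most" are 8" x 10" prints.

```python
# Hypothetical inventory: format -> (number of works, usable film intermediary exists?)
inventory = {
    "existing slides, transparencies, copy prints": (2_500, True),
    '8" x 10" silver gelatin prints': (40_000, False),
    '30" x 40" color photographs': (6_000, False),
    "daguerreotypes": (1_500, False),
}

total = sum(count for count, _ in inventory.values())
no_film = sum(count for count, has_film in inventory.values() if not has_film)

print(f"total works: {total:,}")
print(f"need new film or direct capture: {no_film:,} ({no_film / total:.0%})")
# Largest groups first: each format may need its own capture setup.
for fmt, (count, _) in sorted(inventory.items(), key=lambda kv: -kv[1][0]):
    print(f"  {fmt}: {count:,}")
```

Listing formats by count makes it easier to see which capture setups will carry most of the production load.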

3.2 Source Formats: Film Intermediaries Versus Original Sources

In analyzing the condition and characteristics of the source to be digitized, it is important to consider its format. When scanning from intermediaries, the formats could include slides, transparencies, copy prints, and microfilm. When digitizing from the original source material, it is necessary to determine whether the originals are black-and-white or color photographs, glass-plate negatives, drawings, prints, or maps.

When film intermediaries are being scanned, it is necessary to assess the quality of the intermediaries and determine whether they will be retained after the digital files have been created. In some cases, the film intermediary is retained because of its archival value, and a digital copy is created for access, to be used alongside the film intermediary. While it is common to retain both film and digital versions when the film is deemed to be the preservation copy (Chapman, Conway, and Kenney 1999), it is also common to retain them when the film intermediary itself is the collection (e.g., a slide collection) and is significant as original material. Film intermediaries are also retained when the digital file is created only for access and lacks sufficient resolution for long-term archival storage.

For institutions that are digitizing original materials for long-term archival storage and access, however, the role of the film intermediary as an appropriate surrogate has come into question. Should one digitize from the film intermediary or from the original material? After being digitized, is the film intermediary retained only as a backup to the digital file? If good film intermediaries exist, they are often scanned before one decides how to digitize the rest of the material. Scanning from an existing film intermediary is useful because the original need not be physically handled, but it does require that the film intermediary be of good quality (not faded or scratched) and first-generation (Ester 1996, as quoted in the Image Quality Working Group 1997).

In cases where no intermediary exists, one must decide whether to digitize from the original, using direct digital capture, or to use traditional photographic equipment, create the film, and scan from the film. Scanning from film is no longer considered the optimal solution for digitizing original two-dimensional works, for two reasons. First, creating photographic intermediates entails the significant added cost of making a good photographic copy. Second, with the advances in digital capture, one can now create a digital record of equal or better quality than film and thus bypass the use of film entirely for two-dimensional objects. Moreover, keeping a film copy of the digital file for comfort purposes is neither necessary nor financially justifiable, except in preservation projects where the film is produced with a specific purpose in mind. Direct digital capture requires an investment in equipment and upgrading studio space that may be initially costly. Nonetheless, after the initial investment is made, the institution can be self-sufficient and build internal capability. If initial investments are prohibitive, then one can consider outsourcing: commercial operations can provide studio setup, staff, and rental equipment if the transport of objects is feasible. Sometimes it is possible to develop a digital studio gradually. Much of the photographic equipment from the traditional studio, such as cameras, lighting, copystands, light meters, and densitometers, can be used in the digital studio.

3.3 Size of the Source Originals

The size of the originals influences the equipment used for digital capture. It may be necessary to adjust or customize the equipment to accommodate the size of the materials. Often, a copystand has to be configured to help maintain the physical relationship of the parts to the whole (as in the case of scrapbooks, albums, and sketchbooks). Working with vendors, institutions have found ways to create cradles for fragile materials or expand the size of the scanning table to accommodate oversized works. Specific considerations relative to size are discussed in the next section.

3.4 Unusual Characteristics and Features

Analyzing the unusual characteristics and critical features of the source material can help determine the best way to develop specifications for digital capture. The following examples provide general guidelines for analyzing materials that vary in size, have physical relationships to a whole, have mounts or mats, or are oversized.

3.4.1 Varied Sizes

Original items that vary in size can be difficult to digitize. The following questions are relevant:

- Will it be difficult to find similar items to group together for capture?

If the objects to be digitized are not similar in size or type, production will be slowed, because rearranging the setup for capture (i.e., moving the camera, changing the lighting, or adding filters) takes time.

- What type of setup is required to accommodate objects of different sizes?

Is a larger or wider digital capture table needed? Is there enough storage and staging space to arrange the objects according to size, material, or status (e.g., captured or edited)?

- What file sizes should be considered to accommodate original objects in a range of sizes and shapes?

If one opts to capture objects within a particular range of sizes without moving the camera, the file size will have to vary. Additionally, many objects do not match the square format of many camera backs. To include all of the object's information, one should consider the usable pixel area of the scanback to determine whether it meets one's requirements for file size (Landsberg 2000).
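The usable-pixel-area check can be worked through numerically. The sketch below assumes a hypothetical square back of 6,000 x 6,000 pixels, 24-bit uncompressed RGB, and that each object is framed so its longer side fills the sensor; real scanbacks differ in format and resolution.

```python
def usable_capture(obj_w: float, obj_h: float, back_px: int = 6000,
                   bytes_per_px: int = 3):
    """Frame an object so its longer side fills a square back_px x back_px
    sensor; return (width_px, height_px, file_mb, share_of_sensor_used).

    back_px = 6000 is a hypothetical sensor size; bytes_per_px = 3 assumes
    24-bit RGB without compression.
    """
    long_side, short_side = max(obj_w, obj_h), min(obj_w, obj_h)
    short_px = round(back_px * short_side / long_side)
    # Preserve the object's orientation in the returned dimensions.
    w_px, h_px = (back_px, short_px) if obj_w >= obj_h else (short_px, back_px)
    file_mb = w_px * h_px * bytes_per_px / 1_000_000
    return w_px, h_px, file_mb, (w_px * h_px) / back_px**2


for w, h in [(8, 10), (10, 10), (4, 10)]:
    w_px, h_px, mb, used = usable_capture(w, h)
    print(f'{w}" x {h}": {w_px} x {h_px} px, {mb:.1f} MB, {used:.0%} of sensor')
```

An 8" x 10" print uses 80 percent of this sensor (86.4 MB), while a 4" x 10" object uses only 40 percent, so elongated objects yield smaller files unless they are captured in sections.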

3.4.2 Physical Relationships to the Whole

When digitizing materials that have physical relationships to the whole, care should be taken to maintain those relationships. For example, when capturing an album, the following issues must be considered:

- What is the relationship of the parts to the whole? Can each page be captured separately, or does one have to accommodate a binder and therefore capture two pages at once?

- Does the album need to be cradled to minimize stress on the binding?

- Do the album pages have intrinsic significance, or is it sufficient to capture the images from each page? Is there a relationship between the spreads that should be maintained, or is an indication of sequence enough to recreate the experience of looking through the album?

3.4.3 Mounts and Mats

Institutions digitizing items that are mounted or attached to mats (e.g., photographs, drawings, or prints) should consider these features in the digital capture process. The following questions should be considered (Hermanson 2000):

- Can the object be safely and easily removed from its mount or mat for capture? If not, can the object be captured while attached to the mount or mat?

- If the object needs to be removed, at what level do conservators, framing personnel, or preparators need to be involved? Who can remove an object from simple paper corners? Who can slit hinges or undo other mounting techniques?

- Is an original mount to be treated as part of the object, and, if so, should it be included in the scan in its entirety?

- If the artist has indicated that a work should be cropped, should the scan reflect this?

- If the original material must remain in its mat, what type of physical adjustments does this require? For example, a copystand or scanning table that can accommodate an open mat may be needed. What kind of scanning complications might arise from reflections off this open mat?

3.4.4 Oversized Materials

Digital capture of oversized materials presents the following challenges:

- What type of digital capture device can accommodate an oversized work? Many customized setup solutions are available.

- What kind of lighting arrangements are necessary to illuminate a large object evenly?

- What type of scanning judgments will be necessary to accommodate the critical features? For example, density and type size can vary greatly with oversized materials such as maps.

- What compression issues need to be considered for both archival storage and dissemination to the user? For example, an oversized map of 22" x 27" captured at 300 dpi in 24-bit color becomes a 160-MB file (Yehling Allen 1998).

- How will users' access to such oversized, storage-intensive data be facilitated? Some sites provide tiling, several resolutions, and different details of each image. [7]
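The 160-MB figure for the map can be checked directly: 22 x 300 = 6,600 and 27 x 300 = 8,100 pixels, and 24 bits is 3 bytes per pixel, giving 160,380,000 bytes (about 153 MB if a megabyte is taken as 2^20 bytes). Tiling with several resolutions is commonly implemented as an image pyramid. The sketch below assumes 256-pixel square tiles and halving per level; it is a generic illustration, not the scheme any particular site uses.

```python
import math


def uncompressed_mb(width_in: float, height_in: float, dpi: int,
                    bits_per_px: int = 24) -> float:
    """Uncompressed scan size in megabytes (10**6 bytes)."""
    pixels = round(width_in * dpi) * round(height_in * dpi)
    return pixels * bits_per_px / 8 / 1_000_000


def pyramid(width_px: int, height_px: int, tile: int = 256):
    """Yield (level, width, height, tile_count) for a resolution pyramid
    that halves each dimension per level until one tile covers the image."""
    level = 0
    while True:
        tiles = math.ceil(width_px / tile) * math.ceil(height_px / tile)
        yield level, width_px, height_px, tiles
        if width_px <= tile and height_px <= tile:
            return
        width_px = (width_px + 1) // 2   # integer ceiling of half
        height_px = (height_px + 1) // 2
        level += 1


print(f"{uncompressed_mb(22, 27, 300):.1f} MB")  # 6600 x 8100 px at 3 bytes/px
for level, w, h, n in pyramid(22 * 300, 27 * 300):
    print(f"level {level}: {w} x {h} px, {n} tiles")
```

For the map, the pyramid has six levels, from 832 tiles at full resolution down to a single 207 x 254 pixel tile, so a viewer needs to fetch only the tiles for the level and region on screen.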

Columbia and other institutions that scan large-scale color maps continue to investigate how new digital technologies can be effective in capturing unusual characteristics of source materials. For example, the Library of Congress is using a high-resolution flatbed scanner to digitize directly from the original maps (Fleischhauer 1998). The scanner provides a wide dynamic range, which is important for rendering the subtle tonal detail found in most maps.

3.5 Condition and Disposition of Originals

An analysis of source materials includes an assessment of their condition.

Materials must be examined for cracks, warping, bending, brittle bindings, or losses. Before digitizing, one must determine whether any pre-capture treatments are required. This must be considered in advance because, once the item is captured, the digital file will represent the original, with its inherent cracks and scratches, for as long as the digital archive exists. It is also necessary to determine whether the original intermediate surrogates will be retained or discarded.

The condition of the source material helps determine what methodology is feasible for digital capture. Two-dimensional materials, such as original photographic prints, glass-plate negatives, drawings, prints, and oversized maps, have special handling requirements. For example, photographs are sensitive to light, maps need to lie flat, and albums must be cradled to avoid stress on the binding. The handling of source materials during capture should not exacerbate existing defects. In conjunction with conservators, curators, and digital photographers, the institution should create guidelines for handling the objects or surrogates, determine transport procedures, and define procedures for capture of unique objects or surrogates that are sensitive or brittle. (A surrogate may be the only record of a visual object if the original no longer exists or is missing.)

The choice of equipment for digital capture depends on how the object must be handled. Flatbed scanners are more commonly used for scanning film intermediaries than for scanning originals. High-end flatbed scanners can produce both excellent resolution and a wide dynamic range, often exceeding the standards set by the archive. Scanners typically accommodate materials of up to 9" x 11", but some scanners have larger beds. These scanners can hold several surrogates at once, leaving room for the placement of gray-scale and color bars as part of the documentation.

When one is digitizing from the original object, a flatbed scanner is not always appropriate because it requires the material to lie completely flat and not to exceed a specific size (DeNatale and Hirtle 1998). This technique may not be appropriate for sensitive materials because an unmatted original would have to be pulled up from the corners as it is placed on and removed from the glass. The flatbed scanner also makes it difficult to control light and ultraviolet levels because the light source is often built into the unit and cannot be changed.

Flatbed scanners can, however, be customized in creative ways. Some companies (e.g., Luna Imaging in Venice, California) have adapted commercial flatbed scanners to accommodate the overhang of a mat, so that original flat works measuring 9 x 11 inches or less do not have to be removed from their mats.

When digitizing original materials, a better option is direct digital capture, which uses digital cameras or digital camera backs attached to traditional cameras. It offers more versatility for capturing two-dimensional originals (DeNatale and Hirtle 1998). Using digital camera backs to capture the original material replicates the setups of traditional photographic studios. Because the workspace is flexible, sensitive items can be treated individually, lighting can be adjusted for different sizes and shapes, and procedures for handling and capture can be adjusted (e.g., a decision about whether to place glass over an image during capture can be made for each piece). This approach can also accommodate oversized materials, unusually shaped two-dimensional works, or unique materials such as glass-plate negatives.

From the perspective of conservation, the level of light is a critical concern when digitizing directly from originals. Direct digital capture setups require approximately four times more light than does traditional photography. Customized setups, different lighting solutions, or both can ameliorate concerns about excessive light levels. Heat is a related concern: halogen light, for example, produces a great deal of heat and is therefore potentially problematic. Some companies have developed light housings that block most of the heat produced by halogen lamps [8]. These specialized halogen methods were used in the Vatican Library project (Mintzer et al. 1996). Some museums have also evaluated alternatives to halogen [9], such as HMI or fluorescent lights, which conservators have deemed acceptable.

4.0 Developing Appropriate Capture Specifications and Processes

Articulating the project scope and goals through a needs analysis, and evaluating the characteristics and condition of the source images, are preliminary steps to digital capture. These activities also inform the choice of appropriate capture specifications and processes. The specifications and processes for digital capture are then implemented and subjected to a technical evaluation.

4.1 Image-Capture Specifications

Image-capture specifications can be viewed as parameters of the following items:

- the maximum file size for the archive,

- the required file sizes for the derivative images to be used for the access projects,

- the image editing rules, such as contrast adjustments, cropping, and dust spotting, that will apply to both the archival and derivative copies (this includes whether to optimize the images during the capture process or whether this optimization is done outside the digital studio),

- the storage format (e.g., TIFF, PCD, JPEG, FlashPix) and medium (e.g., CD, DVD, tape) for storing the digital images for archival purposes, and

- the storage format and medium (or network setup) for storing the image derivatives for access.
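The file-size parameters in this list follow from simple arithmetic: pixel dimensions times number of channels times bit depth. The Python sketch below assumes an uncompressed raster (for example, an uncompressed TIFF) and ignores header and metadata overhead; the function name, the 8 x 10 inch original, and the 600 ppi setting are illustrative assumptions, not recommendations.

```python
def uncompressed_size_bytes(width_in: float, height_in: float, ppi: int,
                            channels: int = 3, bits_per_channel: int = 8) -> int:
    """Estimate the size of an uncompressed scan in bytes (no header overhead)."""
    width_px = round(width_in * ppi)
    height_px = round(height_in * ppi)
    total_bits = width_px * height_px * channels * bits_per_channel
    return (total_bits + 7) // 8  # round up to whole bytes


# Hypothetical example: an 8 x 10 inch print scanned at 600 ppi.
rgb_8bit = uncompressed_size_bytes(8, 10, 600)                        # 24-bit RGB
rgb_16bit = uncompressed_size_bytes(8, 10, 600, bits_per_channel=16)  # 48-bit RGB
gray_8bit = uncompressed_size_bytes(8, 10, 600, channels=1)           # 8-bit grayscale

print(f"RGB, 8 bits/channel:  {rgb_8bit / 1e6:.1f} MB")
print(f"RGB, 16 bits/channel: {rgb_16bit / 1e6:.1f} MB")
print(f"Gray, 8 bits:         {gray_8bit / 1e6:.1f} MB")
```

Because pixel count grows with the square of the sampling rate, doubling the ppi quadruples the file size; the maximum archive file size therefore constrains resolution and bit depth together.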

4.2 Testing and Evaluation

Once parameters have been defined, it is necessary to determine whether the equipment under consideration is capable of satisfying the specifications. In turn, one can use digital equipment to evaluate the appropriateness of the capture specifications. This can be achieved by testing the digital equipment and testing the images for their intended uses before the project goes live. This evaluative phase can also be used to assess quality-control standards and workflow.

Testing and evaluation involve considerations of the digital capture equipment, the level of detail captured, the image capture and editing capabilities, and the image capture rules.

4.2.1 Digital capture equipment

Whether digitizing from film intermediaries or the original materials, the following steps can be taken to compare digital capture equipment (i.e., flatbed scanners, digital cameras, and digital camera backs). The results should determine an appropriate component for the project (Serenson Colet, Keller, and Landsberg 1997-1998).

- Compare price and quality.

- Evaluate software for image adjustments and color-management tools.

- Check for artifacts (defects in the capture equipment).

- Look at the spectral sensitivity of the digital capture device.

- Evaluate speed of capture time and usable exposure time.

- Review lighting requirements.

- Identify handling concerns that the equipment imposes.

- If necessary, consider customized solutions for the digital studio (e.g., for lighting or a copystand that holds the original).

4.2.2 Level of detail captured

Remember that the level of detail to be captured is directly related to the size of the digital file to be archived. When capturing posters or maps with small text where legibility is critical, the equipment will have to accommodate both the size and detail required. Small originals may not require as high a resolution, but the equipment does need to capture a good dynamic range to pick up shadows or minute subtleties in the original. There may be slight differences in the ability of different devices to capture this information.

4.2.3 Image capture and editing capabilities

While testing the equipment, the user should also experiment with the image capture and image editing capabilities such as adjusting contrast and cropping. Images intended for a specific project, such as a Web site, can be optimized for the particular application at the time of their capture. For such applications, the equipment should be capable of generating an optimal capture. However, if one decides to capture the image and optimize it later, the equipment should be able to capture and store information with more neutral controls (e.g., minimal adjustments and without sharpening). The images can then be uniformly changed for the varied applications. This way, they can be used for many purposes and saved without a specific application in mind.

4.2.4 Image capture rules

In the initial stages, deciding upon the image capture and editing rules can be difficult because it requires projecting how users will use or access the digital images. Consequently, the process is an iterative one, in which images are captured, edited, and tested for their intended use(s), and the feedback from that process is used in the next iteration. For example, if the images are used for a Web site, it will be necessary to test whether the chosen resolution is appropriate for the intended audience. If the images are used for high-resolution printing, one must work with the publications department or printer to perform printing tests of the digital files. Run a press proof to determine the quality of the image and whether it will be suitable for printing to your institutional standard. Whether the project is done internally or with the help of an outside vendor, the importance of these evaluations cannot be overstated. Much will be learned during the tests. In fact, one should expect to continue going through iterative processes of testing and refinement even after the project has moved beyond the evaluative phase.

One of MoMA's digital initiatives provides a good example of the importance of testing and evaluation. MoMA's digitization team includes individuals with a range of managerial and technical expertise. [10] In 1997, MoMA embarked on an ambitious project to digitize 25,000 original photographs from the museum's collection. The digitization team used direct digital capture to digitize works of artists such as Edward Weston, Edward Steichen, and Man Ray. No film is used to create the digital reproduction. These digital surrogates are created with consistent standards that are appropriate to the institution's needs for archiving and access. They are used for the museum's Web site, collections- and exhibitions-management system, educational kiosks, and high-end book production. The museum has succeeded in creating digital files that can surpass the quality of film in tonal fidelity, an important criterion for printing black-and-white, duotone, and tritone photographic books. It has also succeeded in creating an archival capture that can be used for many access projects. The key to MoMA's success was the planning, testing, efficient workflow, and immediate use of the growing digital archive for a variety of applications.

4.3 Efficient Workflow

Efficient workflows are essential to the success of digital projects. Digital projects are research projects, but they also have to be productive to be financially feasible. Traditionally, museums, libraries, and archives have emphasized quality and downplayed the importance of production. But unless productivity rates are acceptable, the return on the financial investment cannot be realized. Aware of this, many institutions are realizing that they can create a workflow that maximizes the output efficiency of their studios. To do so requires that every stage in the studio (the initial handling of original material, the image capture, the image editing, the movement to image storage, and the eventual image transfer for online access) work as a high-quality assembly line. Digital experts can teach us a great deal about production. Süsstrunk (1998) provides a comprehensive case study of a digital production workflow.

The following are recommendations for an efficient workflow.

- Staff the studio with equipment operators who respect the original material but can also work on repetitive production-oriented tasks.

- Set up a physical workspace that is conducive to the safety of the originals and the workers. Digital capture operators should have comfortable work setups when doing repetitive tasks.

- Balance quality controls with production targets for an efficient workflow.

- Chase the bottlenecks in the production flow: as one is removed, another may arise.

- Keep administrative tasks (e.g., recording metadata) in the studio to a minimum. Unless a staff member in the studio is dedicated to this task as part of the assembly line, it should be done outside the studio.

- Consider ways to continue the workflow outside the studio (storing the master image, disseminating the derivatives, and creating an enterprise-wide solution for archiving and access). An institution that is not set up for the massive organization required once the digital file leaves the studio may have to secure outside services for this purpose.

- Organize work in batches (e.g., by size and by like medium) for maximum efficiency.

- Determine where the archive will be stored (internally or off-site).

- Determine where the archive will be managed (internally or off-site).

- Determine whether and when to refresh storage media (in preparation for migration).
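The advice to chase bottlenecks can be made concrete with a little arithmetic. If each stage of the assembly line works on a different item at the same time, the slowest stage sets the studio's throughput. The Python sketch below assumes one station per stage and a fixed time per image at each stage; the stage names and times are hypothetical.

```python
def bottleneck(stage_minutes: dict) -> tuple:
    """Return (slowest stage, images per hour) for a pipeline with one station per stage."""
    stage, minutes = max(stage_minutes.items(), key=lambda item: item[1])
    return stage, 60.0 / minutes


# Hypothetical per-image times, in minutes.
times = {"handling": 2.0, "capture": 4.0, "editing": 6.0, "transfer": 1.5}
print(bottleneck(times))   # ('editing', 10.0)

# Speed up editing (e.g., by adding an editor); capture becomes the new bottleneck.
times["editing"] = 3.0
print(bottleneck(times))   # ('capture', 15.0)
```

Halving the editing time raises throughput by 50 percent, not 100 percent, because capture then limits the line: removing one bottleneck exposes the next.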

4.4 Documenting the Decision-Making Process

All decisions associated with planning a digital project must be documented. Keeping a record of the institutional and technical evaluations will help future staff members and others understand why certain decisions were made. Making and revising these decisions should be a group effort, because various degrees of expertise are required at various times. One should also document the procedure for handling materials (i.e., what happens from the time the work enters the digital studio, through its capture, until it leaves, as well as how the digital file is prepared for access and archival purposes). Analyzing this workflow will help identify bottlenecks. The workflow will continually be revised as the team improves or changes methods in the digital studio [11].

It is also important to document what digital and computer equipment is being employed and the particular settings used. This will be helpful when one has to identify problems with the equipment or go through the process of migrating digital files. Knowing how the digital images were created will provide good information when change is required.

It is important to document the following information related to image capture, editing, and processing:

- date of capture

- type of digital capture and its characteristics

- targets used

- density values on gray-scale and color bars

- color-management profiles

- general profiles that connect the digital equipment in the studio

- contrast or color settings used in the imaging software

- type of lighting used in the capture process

- computer equipment used

- file size and file format used (e.g., TIFF, JPEG)

- contrast adjustment recorded (e.g., black-point and white-point values during image editing)

- medium used for storage and transfer (e.g., CD, DLT)

- notes relating to opening the file, if any

- notes relating to optimizing the file for varied uses (e.g., recording the contrast or color settings used is an important identifier for those who receive the image)
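Keeping this documentation in a structured, machine-readable form makes it easier to search and to carry forward during migration. The sketch below is one possible Python record built only on the standard library; the class name, field names, and sample values (including the profile file name) are hypothetical and would need to be mapped onto whatever metadata scheme the institution adopts.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


@dataclass
class CaptureRecord:
    """One capture's technical documentation. All field names are hypothetical."""
    capture_date: str                   # ISO 8601 date, e.g. "2000-07-01"
    capture_device: str                 # type of digital capture and its characteristics
    targets: List[str]                  # targets included in the capture
    gray_scale_densities: List[float]   # density values on the gray-scale bar
    color_profiles: List[str]           # color-management profiles in use
    software_settings: Dict[str, str]   # contrast or color settings in the imaging software
    lighting: str
    computer: str
    file_format: str                    # e.g. "TIFF"
    file_size_bytes: int
    storage_medium: str                 # e.g. "CD"
    black_point: Optional[int] = None   # contrast adjustment recorded during editing
    white_point: Optional[int] = None
    notes_opening: str = ""
    notes_optimizing: str = ""

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = CaptureRecord(
    capture_date="2000-07-01",
    capture_device="digital camera back on view camera (hypothetical)",
    targets=["gray scale", "color bar"],
    gray_scale_densities=[0.05, 0.75, 1.50],
    color_profiles=["studio-camera.icc"],
    software_settings={"contrast": "neutral", "sharpening": "off"},
    lighting="HMI",
    computer="studio workstation 1",
    file_format="TIFF",
    file_size_bytes=86_400_000,
    storage_medium="CD",
    black_point=8,
    white_point=247,
)
print(record.to_json())
```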

5.0 Conclusion

The products of a digital imaging project can have many different uses. This paper has stressed the advantages of using a use-neutral, rather than a use-specific, approach whenever possible. Creating a high-quality, long-term archive will ultimately help an institution benefit from the investment of time and money that these projects require. The decision-making process must be documented so that particular justifications become explicit when images are used in specific production tasks (e.g., the publication of an exhibition catalog). The following guidelines are offered for documenting the decision-making process for a use-neutral approach:

- Articulate the project scope and assess the characteristics of the source materials.

- Capture at the highest resolution possible within the constraints of the project's resources.

- Store use-neutral image files (i.e., keep device-dependent color correction and other optimizations to a minimum).

- When using the stored digital images for specific production tasks, incorporate the post-process optimizations that are specific to the task at hand (e.g., the production of an exhibition catalog).

This guide has covered the process of planning a digital project, from the initial planning phases, in which the scope and goals are defined, to the analysis of source material(s) to be digitized, to the process of defining image capture specifications and image capture procedures that are then iteratively evaluated. If this process is followed closely, the result should be an efficient workflow and a successful digital project.

Footnotes

1. Refer to Stephenson and McClung (1998) and Gill, Gilliland-Swetland, and Baca (1998) for more information on preparing digital images for end uses.

2. Further information about printing from digital files can be obtained from MoMA's Publications Department (M. Maegraith, M. Sapir, and C. Zichello).

3. For a detailed analysis of migration issues, refer to Hedstrom and Montgomery 1998.

4. Süsstrunk (2000) notes the following: 'The dpi resolution indicated by printer manufacturers usually (but not always) refers to the addressable resolution of that printer. That means that the printer can put that many dots of ink on paper per inch. However, the dots on the paper overlap, and the ink spreads depending on the surface characteristics of the paper (uncoated paper induces more spreading, and therefore has a lower resolvable resolution than coated paper). Therefore, the 'resolvable' resolution of a printer is always lower than the 'addressable' resolution.'

5. In spring 1999, NISO, CLIR, and RLG cosponsored the Technical Metadata Elements for Images Workshop in Washington, D.C., which drew digital practitioners from various institutions. The goal of the workshop was to begin setting digital metadata standards for the museum, library, and archives communities.

6. The questionnaire featured in Harvard's Image Scanning Guidelines is helpful in evaluating these issues (Technical Working Group, Visual Information Access Project 1998). Refer also to Conway 1999 and Ayris 1998 for further guidelines on assessing source images for scanning.

7. Refer to Yehling Allen (1998) for Internet sites that provide excellent guidance on how to make large images available to users electronically.

8. Tarsia Technical Industries in Fairfield, New Jersey, is one such company.

9. Photographers from the following institutions have been researching alternative lighting solutions for direct digital capture: Kate Keller and Erik Landsberg at MoMA, David Heald at the Guggenheim, Michael Bevans and Andy Proft at the Johnson Museum of Art at Cornell University, and John Wolffe at the Museum of Fine Arts, Boston.

10. The digitization team included digital technicians, the director and the assistant to the director of photographic services and permissions, the chief curator, assistant curator, chief fine art photographer, senior fine art photographer, publisher, and post processor.

11. For a detailed matrix for decision-making, the reader is referred to Hazen, Horrell, and Merrill-Oldham (1998).

Acknowledgements:

Although this chapter was written by a single author, it reflects the accomplishments and knowledge of the original digital team that forged new improvements in digital imaging at the Museum of Modern Art. The following people can be contacted for up-to-date information about MoMA's digital projects.

- Mikki Carpenter, Director of Photo Services and Permissions

- Peter Galassi, Chief Curator of Photography

- Sarah Hermanson, Assistant Curator of Photography

- Kate Keller, Head of Fine Arts Imaging

- Erik Landsberg, Manager of Imaging Technologies

- Michael Maegraith, Publisher

Guides to Quality in Visual Resource Imaging

2. Selecting a Scanner

Don Williams

© 2000 Council on Library and Information Resources

- 1.0 Introduction

- 2.0 Source Material Characterization

- 3.0 Background and Definitions of Image Quality Features for Scanner Selection

- 4.0 Understanding Product Specifications

- 5.0 Resources and Methods for Image Quality Verification

- 6.0 Scanner Review

1.0 Introduction

What is a scanner? It is more than a beige desktop box or copy stand camera. It includes the related driver software and application programs that manage it. Some may consider this a technicality, but if the history of desktop printers is a harbinger for capture devices such as digital scanners and cameras, then one must treat the triad of hardware, driver software, and application as the scanner. When choosing a scanner, all of them need to be evaluated and treated as a unit. Table 1 presents common attributes associated with each scanner component. Some of these functions may migrate between categories or from device to device. Most of them affect image quality in some way.

| Hardware | Driver Software | Application |
|---|---|---|
| Light source | Number of bits per pixel | Color management |
| Platen size | Image processing | Compression |
| Scan speed | Productivity | Scripting |
| Optics and optical path | Calibration | File formats |
| Mechanics | Gamma selection | |
| Sensor | Scaling | |
| Power requirements | OCR | |
| Factory support | Raster-to-vector conversion | |
| Electronics path | Page format retention | |
| Auto-document feed (ADF) | | |
| Transparency adapter | | |

A scanner must be selected in the context not only of the characteristics of the object to be scanned but also of the intended use of the scanned image. There is no sense in purchasing an expensive scanner when the resulting images will be used only for Web site postings. On the other hand, creating digital master files for unknown future uses requires strict attention to detail and an understanding of how image information manifests itself and can be properly captured.

Section 2 of this guide reviews the salient categories of source materials, namely content, format, and optical characteristics. Section 3 contains definitions of image quality features. These definitions are used as a basis for a discussion of setting minimal scanning requirements to achieve suitable image quality according to source and intent. Resources and methods to measure or judge these image quality features are described at length. Because not everyone is willing to perform image quality measurements on their own, Section 4 presents information on the interpretation of manufacturers' scanner specifications. Examples of such specifications, along with explanations, are included. The guide concludes with a review of scanner types in terms of image quality and implementation features.

2.0 Source Material Characterization

Knowing your collection and understanding the priorities for digitizing it will help you determine the type of scanner to choose. There are four classes of scanners from which to select: film scanners, cameras, flatbed or sheet-fed scanners, and drum scanners. Except for film scanners, there can be considerable overlap in the content, format, and optical characteristics that each type of device can scan. Table 2 presents source material categories according to these three features.

| Content | Format | Optical Characteristics |
|---|---|---|
| Color type: black and white, full color, monochrome | Size | Reflection/transmission |
| Photographs | Three-dimensional (3-D) | Surface characteristics (gloss, texture, flat/wrinkled) |
| Text | Film (roll or sheet) | Density range |
| Halftones | Bound/unbound | Spatial detail content |
| Manuscripts | Flexible (film)/inflexible (glass plates) | Color dye/pigment gamut |
| Line art | | Artifacts/condition (e.g., scratched, fragile, torn, bent, faded) |
| Art work | | |
| Mixed | | |

Some types of scanners are better at capturing certain of these features than others. Benchmarking a scanner with respect to image quality features will delineate these differences. Definitions of these features and techniques for evaluating their quality are covered in the remainder of this guide.

3.0 Background and Definitions of Image Quality Features for Scanner Selection

In its purest form, image quality can be judged by the signal and noise characteristics of the image or scanning device under consideration. The ratio of signal to noise (S/N) is often used as a single measure for image quality; that is, the greater the S/N ratio, the better the image quality. However, because one person's signal is another person's noise, the use of S/N as an image quality metric is difficult to manage. The interpretation of signal and noise becomes too broad and, in turn, ambiguous. S/N can be a useful measure for characterizing scanner performance; however, translating this measure into absolute image quality is difficult.

Consequently, image quality features are dealt with by more tractable imaging performance categories. There are five such categories: tone reproduction, color reproduction, resolution, noise, and artifacts. All yield objective measures that contribute to overall image quality in complex ways. For instance, a viewer does not perceive tone reproduction or resolution but rather the psychophysics of lightness, contrast, and sharpness. He or she then creates a mental preference for the image. Although these categories cannot measure image quality directly, they do serve as a good high-level model for evaluating image quality. The remainder of this section is devoted to detailed definitions of these image quality features. It will serve as a basis for further discussions on specifications and tools for scanner selection.

3.1 Tone Reproduction

Tone reproduction is the rendering of original document densities into luminances on softcopy displays or into densities in hardcopy media. It is the foundation for the evaluation of all other image quality metrics. It determines whether a reproduced image is too dark or too light and whether its contrast is too low or too high, and it implicitly assumes the evaluation of neutral gray tones over large areas of an image.

The seductive beauty of a photograph by Ansel Adams or Irving Penn is due primarily not to the image content, composition, sharpness, or low noise but rather to the remarkable reproduction of tones, from gleaming highlights to deep-shadow details, with all tones in between. Tone reproduction is the welcome mat to the evaluation of all other image quality metrics. Although on the surface tone reproduction seems a simple job of tone management, the subtleties of the viewing environment and cultural and professional preferences often make it an art.

For scanned image files, tone reproduction is somewhat of a misnomer unless a final viewing device is assumed. This is because the capture process in a scanner is simply that: a capture step. It is not a display that reproduces light. Tone reproduction, by contrast, requires both capture and display. How, then, does one select a scanner to accommodate the best possible tone reproduction when the scanned data may be reproduced on any number of display types and for a number of viewing preferences?

Three objective image-quality attributes of a scanner, namely the opto-electronic conversion function (OECF), dynamic range, and flare, ultimately and universally affect all tone reproduction. The scanner's driver software often controls the OECF; dynamic range and flare are inherent in the hardware.

The OECF is a term used to describe the relationship between the optical density of a document and the average digital count level associated with that density, as detected by the scanner. The OECF is the first genealogical link between an original object and its digital offspring and is usually controlled by the software driver. The extent to which the driver software allows the user to control the OECF and documentation on how the driver software accomplishes this are important features to consider when selecting a scanner.
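In practice, the OECF is measured by scanning a gray-scale target whose step densities are known and averaging the digital counts within each step. A minimal Python sketch, assuming the pixel samples have already been cropped from the center of each step; the function name and sample values are hypothetical.

```python
from statistics import mean


def measure_oecf(patch_densities, patch_pixels):
    """Pair each target density with the mean digital count of its patch.

    patch_densities: known (measured) densities of the gray-scale steps.
    patch_pixels:    pixel counts sampled from the corresponding patches in the scan.
    Returns (density, mean count) pairs sorted from lightest to darkest step.
    """
    if len(patch_densities) != len(patch_pixels):
        raise ValueError("need one pixel sample per density step")
    return sorted((d, mean(pixels)) for d, pixels in zip(patch_densities, patch_pixels))


# Hypothetical 8-bit samples from four gray-scale steps.
densities = [0.05, 0.50, 1.00, 1.50]
samples = [[250, 252, 248], [160, 162, 158], [90, 92, 88], [40, 41, 39]]
for density, count in measure_oecf(densities, samples):
    print(f"D = {density:.2f} -> mean count {count}")
```

Repeating the measurement under different driver settings (for example, different gamma selections) shows how much control the driver actually gives over the OECF. Formal measurement procedures are standardized (ISO 14524 covers OECFs for electronic still-picture cameras); this sketch is only a first look.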

Dynamic range is the capacity of a scanner to distinguish extreme variations in density. As a rule, the dynamic range of a scanner should meet or exceed the density extremes of the object being scanned. Because specifications for dynamic range are frequently overstated, the means to objectively verify these claims should be at hand. This will be covered in Section 4.3.
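The rule that a scanner's dynamic range should meet or exceed the density extremes of the original can be checked with simple arithmetic. The sketch below also computes the ceiling imposed by bit depth alone; a quoted figure may sit at or near that ceiling even though noise and flare keep the achieved range lower, which is one reason objective verification matters. Function names and all numbers are hypothetical.

```python
import math


def density_range(densities):
    """Density range of an original: Dmax - Dmin."""
    return max(densities) - min(densities)


def encoding_limit(bits_per_channel):
    """Largest density range a linear encoding of this bit depth can represent.

    The brightest code (2**bits - 1) is compared with the darkest nonzero code (1).
    This is a ceiling imposed by the number format, not a measured capability.
    """
    return math.log10(2 ** bits_per_channel - 1)


# Hypothetical original: measured densities from its lightest to darkest areas.
original = [0.08, 0.9, 1.7, 2.9]
needed = density_range(original)          # about 2.82
measured_scanner_range = 2.6              # hypothetical result of an objective test

print(f"original needs {needed:.2f}; scanner delivers {measured_scanner_range:.2f}")
print("adequate" if measured_scanner_range >= needed else "inadequate")
print(f"8-bit ceiling {encoding_limit(8):.2f}, 12-bit ceiling {encoding_limit(12):.2f}")
```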

Flare is non-image-forming light with little to no spatial detail content. It manifests itself by reducing the dynamic range of a device and is generally attributed to stray light in an optical system. Documents in which low densities predominate and devices requiring large illuminated areas, such as full-frame digital cameras, generally suffer from high flare. These two conditions should be kept in mind when selecting a scanner. Whenever large amounts of light, even if outside the scanner's field of view, are involved in imaging, flare may become a problem.
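Why light documents and large illuminated areas suffer more can be illustrated with a deliberately simple model that treats flare as a uniform veil of light, proportional to the mean reflectance of the illuminated area, added to every point in the image. The flare fraction below is an assumed parameter, not a measured property of any device, and the function name and values are hypothetical.

```python
import math


def effective_density_range(d_min, d_max, mean_reflectance, flare_fraction):
    """Density range left after a uniform flare veil is added to every point.

    Simplified model (an assumption): flare adds flare_fraction * mean_reflectance
    to the reflectance of every point in the image.
    """
    r_light = 10 ** -d_min   # reflectance of the lightest area
    r_dark = 10 ** -d_max    # reflectance of the darkest area
    veil = flare_fraction * mean_reflectance
    return math.log10((r_light + veil) / (r_dark + veil))


# Same original densities (0.1 to 2.5) and same 1% flare, two kinds of document.
light_doc = effective_density_range(0.1, 2.5, mean_reflectance=0.7, flare_fraction=0.01)
dark_doc = effective_density_range(0.1, 2.5, mean_reflectance=0.1, flare_fraction=0.01)
print(f"mostly light document: {light_doc:.2f}")   # about 1.90
print(f"mostly dark document:  {dark_doc:.2f}")    # about 2.28
```

Without flare both documents would keep the full range of 2.4; the veil raises the darkest values most in relative terms, and a light document contributes more veil.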

See also, Tone Reproduction, Guide 4.

3.2 Resolution

Resolution is the ability to capture spatial detail. It is considered a small-area signal characteristic. Before the advent of electronic capture technologies, resolution was measured by imaging increasingly finely spaced target features (e.g., bars, block letters, circles) and by visually inspecting the captured image for the finest set of features that was detectable. The spatial frequency of this set of features was considered the resolution of that capture process. Measured in this way, resolution values depended on the target's feature set, the image contrast, and the inspector's experience. The units used for reporting resolution were typically line pairs per millimeter.

The resemblance of these units to the spatial sampling rate units of a digital capture device is unfortunate and continues to be a source of confusion about just what resolution is for a digital capture device. For digital capture devices, resolution is not the spatial sampling rate, which is characterized by the number of dots per inch (dpi).
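A sampling rate sets only an upper bound on resolution: at least two samples are needed per line pair, so the highest representable frequency (the Nyquist limit) is half the sampling rate. The Python sketch below converts dpi into that limit in line pairs per millimeter; a scanner's actual resolution, limited by optics, sensor, and processing, can be considerably lower, which is why it must be measured rather than read off the dpi figure. The function name is hypothetical.

```python
MM_PER_INCH = 25.4


def nyquist_lp_per_mm(dpi):
    """Highest spatial frequency a given sampling rate can represent (Nyquist limit).

    One line pair needs at least two samples, so the limit is dpi / 2 line pairs
    per inch, converted here to line pairs per millimeter.
    """
    return dpi / 2 / MM_PER_INCH


for dpi in (300, 600, 1200):
    print(f"{dpi} dpi -> at most {nyquist_lp_per_mm(dpi):.1f} lp/mm")
```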

The type of measurement described above is considered a threshold metric, because it characterizes the limiting spatial detail that is just resolvable. It reveals nothing about how the lower spatial frequencies are handled in the capture process; in other words, the extent to which they are resolvable. It is largely a pass/fail criterion. Because of this shortcoming, as well as feature, contrast, and inspector dependencies, resolution measurement done in this way is not robust. A supra-threshold metric is needed that removes not only the feature set and contrast dependencies but also the inspector's subjectivity.

The modulation transfer function (MTF) is a metric that allows one to measure resolution in a way that satisfies these criteria. The MTF is a mathematical transformation of the line-spread function (LSF). The LSF is a fundamental physical characterization of how light spreads in the imaging process, and for spatial resolution measurements, it is the Holy Grail. A detailed explanation of MTF, its value, and how it is used can be found in Image Science by J.C. Dainty and R. Shaw (1974).
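In practice the LSF is often obtained by scanning a sharp edge and differentiating the resulting edge profile (the edge-spread function); the MTF is then the magnitude of the LSF's Fourier transform, normalized to 1 at zero frequency. The pure-Python sketch below shows only that chain on a single row of pixels. It is a simplification: standardized edge methods such as the slanted-edge procedure of ISO 12233 also oversample the edge and apply windowing and other corrections, none of which is done here. Function names and sample data are hypothetical.

```python
import cmath


def lsf_from_esf(esf):
    """Line-spread function from an edge-spread function by finite differences."""
    return [b - a for a, b in zip(esf, esf[1:])]


def mtf(lsf):
    """|DFT(LSF)| normalized to 1 at zero frequency.

    Entry k corresponds to k / len(lsf) cycles per pixel, for k = 0 .. len(lsf) // 2
    (the last entry is the Nyquist frequency when len(lsf) is even).
    """
    n = len(lsf)
    dc = abs(sum(lsf))
    result = []
    for k in range(n // 2 + 1):
        s = sum(v * cmath.exp(-2j * cmath.pi * k * x / n) for x, v in enumerate(lsf))
        result.append(abs(s) / dc)
    return result


# Hypothetical row across a slightly blurred dark-to-light edge (normalized counts).
edge = [0.0, 0.0, 0.0, 0.25, 0.75, 1.0, 1.0, 1.0, 1.0]
lsf = lsf_from_esf(edge)          # [0.0, 0.0, 0.25, 0.5, 0.25, 0.0, 0.0, 0.0]
for k, m in enumerate(mtf(lsf)):
    print(f"{k / len(lsf):.3f} cycles/pixel: MTF = {m:.2f}")
```

A perfectly sharp edge gives a single-pixel LSF and an MTF of 1 at every frequency; the blurred edge above falls from 1 at zero frequency to 0 at Nyquist, describing how all frequencies are handled rather than a single threshold.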

3.3 Color Reproduction

Color reproduction, like tone reproduction, is a misnomer for scanners because colors are only being captured, not reproduced.

A more accurate term has been coined for the potential color performance or fidelity of a digital capture: the metamerism index. Work is under way in the International Organization for Standardization (ISO) to propose a metamerism index that would quantify the color-capture performance of a device relative to that of a human observer. The goal would be for the scanner to 'see' colors in the same way as humans do. A metamerism index of zero would indicate equivalence between the scanner's color performance and that of a human observer. Calculation of the metamerism index requires knowledge of the device's color channel sensitivities as well as the illumination type being used, two pieces of information not normally provided by scanner manufacturers. In the absence of such a measure, a suitable surrogate for color capture fidelity, called average Delta E*, or ΔE*, is often used.

ΔE* makes use of a standardized perceptual color space called CIELAB. This color space, characterized by three variables (L*, a*, and b*), is one in which equal distances in the space represent approximately equal perceptual color differences. L*, a*, and b* can be measured for any color and specified illuminant. By knowing these values for the color patches of a target and comparing them with their digitized values, a color fidelity index, ΔE*, can be measured.
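The comparison described above can be sketched in a few lines of Python. The text does not say which color-difference formula is meant; the sketch assumes the original CIE 1976 formula, in which ΔE* is the Euclidean distance between two CIELAB triples (later formulas such as CIEDE2000 weight the differences and give different numbers). It also assumes the scan's values have already been converted to CIELAB, which requires a characterized device and is not shown. Names and sample values are hypothetical.

```python
import math


def delta_e_1976(lab_ref, lab_meas):
    """CIE 1976 color difference: Euclidean distance between two CIELAB triples."""
    return math.dist(lab_ref, lab_meas)


def average_delta_e(reference, measured):
    """Mean Delta E* over corresponding target patches."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("need the same, nonzero number of reference and measured patches")
    return sum(delta_e_1976(r, m) for r, m in zip(reference, measured)) / len(reference)


# Hypothetical L*a*b* values: target patches as measured on the original vs. in the scan.
target = [(50.0, 0.0, 0.0), (70.0, 10.0, 10.0), (40.0, -20.0, 30.0)]
scanned = [(53.0, 4.0, 0.0), (70.0, 10.0, 10.0), (40.0, -20.0, 32.0)]
print(f"average Delta E* = {average_delta_e(target, scanned):.2f}")
```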

Finally, gray-scale uniformity may be considered a form of color fidelity. Gray-scale uniformity is a measure of how well neutral tones are detected equivalently by each color channel of a scanner. Although it can also be measured with the CIELAB metric, there are often occasions where the L*a*b* values are not available. In such cases, a first step in measuring color fidelity is to examine how well the average count value of different density neutral patches matches across color channels.
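When L*a*b* values are not available, the channel comparison described above is easy to automate. A minimal Python sketch, assuming pixel counts have been sampled from each neutral step of a gray scale in every color channel; names and values are hypothetical.

```python
from statistics import mean


def channel_spread(neutral_patches):
    """For each neutral patch, the spread (max - min) of its per-channel mean counts.

    neutral_patches: list of dicts mapping a channel name to pixel counts from that patch.
    A spread near zero means the channels detect that gray level equivalently.
    """
    spreads = []
    for patch in neutral_patches:
        channel_means = [mean(counts) for counts in patch.values()]
        spreads.append(max(channel_means) - min(channel_means))
    return spreads


# Hypothetical samples from a light and a dark step of a gray scale.
patches = [
    {"R": [200, 202], "G": [201, 201], "B": [199, 199]},
    {"R": [100, 100], "G": [92, 94], "B": [101, 99]},
]
print(channel_spread(patches))   # spreads of 2 and 7 counts; the dark step reads low in green
```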

See also, Color Accuracy, Guide 3 and Color Reproduction, Guide 4.

3.4 Noise

For photographic film, noise is often referred to as 'grain' or 'granularity,' since its appearance is granular or random in nature. Like film, digital scanners and cameras have sources of noise related to signal detection and amplification. The nature of this noise is similar to that of film and can be defined as unwanted pixel-to-pixel count fluctuations of a random or near-random nature.
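The simplest objective noise measure follows directly from this definition: the standard deviation of the counts in a patch that should be uniform. The sketch below assumes such patches have been cropped from a scanned gray scale; because noise usually varies with density, it is reported per step. Names and values are hypothetical.

```python
from statistics import mean, pstdev


def noise_by_step(patches):
    """Mean count and standard deviation of counts for each uniform patch.

    patches: dict mapping a patch label (e.g., its density) to pixel counts.
    The standard deviation is a simple measure of pixel-to-pixel fluctuation;
    any gradual shading across the patch is counted as noise here.
    """
    return {label: (mean(counts), pstdev(counts)) for label, counts in patches.items()}


# Hypothetical 8-bit samples from two gray-scale steps.
steps = {0.3: [180, 182, 181, 179, 183], 1.8: [30, 34, 27, 35, 29]}
for density, (avg, sd) in noise_by_step(steps).items():
    print(f"D = {density}: mean {avg}, noise (std. dev.) {sd:.2f}")
```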

Digital capture devices, unlike film, may also be associated with non-random or fixed-pattern noise sources. For area-array sensors, these include pixel, line, and cluster defects from the detector. For better cameras, these defects are identified at manufacturing and digitally masked in the finished image file. For linear or line-array scanners, poorly corrected sensor defects manifest themselves as streaks in the image. While these are often classified as artifacts, their effects are ultimately integrated into the noise measurement.
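Streaks from a line-array scanner show up as whole columns along the scan direction whose average differs from their neighbors. A rough Python check, assuming a scan of a uniform target oriented so that the scan direction runs down the columns; the function names and the tolerance value are hypothetical, and a real tolerance would be set from the device's normal noise level.

```python
from statistics import median


def column_means(image):
    """Average count of each column of a 2-D image given as a list of rows."""
    rows = len(image)
    return [sum(row[c] for row in image) / rows for c in range(len(image[0]))]


def find_streak_columns(image, tolerance):
    """Indices of columns whose mean differs from the median column mean by more than tolerance."""
    means = column_means(image)
    reference = median(means)
    return [c for c, m in enumerate(means) if abs(m - reference) > tolerance]


# Hypothetical scan of a uniform gray card; column 2 comes from a weak sensor element.
flat_field = [
    [100, 100, 90, 100],
    [101, 99, 91, 100],
    [99, 101, 89, 100],
]
print(find_streak_columns(flat_field, tolerance=5))   # [2]
```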

Just as a scanner's resolution performance can be characterized via the MTF, noise measurements can be characterized according to spatial frequency content. The term for such a measurement is noise power spectrum (NPS). The photographic community implicitly uses NPS to calculate a single granularity metric by requiring that noise measurements be done under conditions that weight the noise at spatial frequencies consistent with the human visual response (Dainty and Shaw 1974).
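To make the NPS idea concrete, here is a simplified one-dimensional sketch: the periodogram of a single mean-subtracted row taken from a uniform patch. The function name, the normalization (chosen so that summing the spectrum over frequency returns the pixel variance), and the sample values are assumptions for illustration. Practical measurements average many segments, usually in two dimensions, and the visual weighting used for photographic granularity is not applied here.

```python
import cmath
import math


def nps_1d(trace, pitch_mm):
    """Simplified 1-D noise power spectrum of one row of a uniform patch.

    Returns (spectrum, freq_step): one power value per DFT bin (bins above
    n/2 correspond to negative frequencies) and the bin spacing in cycles/mm.
    Normalized so that sum(spectrum) * freq_step equals the trace variance.
    """
    n = len(trace)
    mean = sum(trace) / n
    centered = [v - mean for v in trace]  # remove the mean (DC) level
    spectrum = []
    for k in range(n):
        # Direct DFT; O(n^2), which is fine for a short illustrative trace.
        acc = sum(c * cmath.exp(-2j * math.pi * k * i / n)
                  for i, c in enumerate(centered))
        spectrum.append((pitch_mm / n) * abs(acc) ** 2)
    freq_step = 1.0 / (n * pitch_mm)
    return spectrum, freq_step


# Hypothetical counts from one row of a uniform gray patch, 600 ppi sampling.
trace = [100, 103, 98, 101, 99, 102, 97, 100]
nps, df = nps_1d(trace, pitch_mm=25.4 / 600)
mean = sum(trace) / len(trace)
variance = sum((v - mean) ** 2 for v in trace) / len(trace)
print(variance)  # 3.5
print(abs(sum(nps) * df - variance) < 1e-9)  # True
```

The normalization check in the last line is Parseval's theorem: the spectrum redistributes the pixel variance across spatial frequencies without adding or losing any.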

See also Noise, Guide 4.

3.5 Artifacts

Artifacts are best categorized as a form of noise (correlated noise, to be specific). Because artifacts do not appear as random fluctuations, they do not fit most observers' perceptions of noise and hence are given their own image quality category. Most artifacts are peculiar to digital imaging systems. The most common are nonuniformity, dust and scratches, streaks, color misregistration, aliasing, and contouring/quantization. At low levels, for short periods of viewing, artifacts are considered a nuisance. At moderate levels they can render a digital image defective, especially once the observer has become sensitized to them. The most common types of artifacts may be described as follows:

3.5.1 Nonuniformity

Nonuniformity is a large-area variation in illumination caused by uneven lighting or by in-camera light attenuation such as vignetting. Nonuniformity across an image is extremely hard to detect without image-processing aids; illumination can vary by as much as 50 percent from center to corner before the variation can be detected without aids. Flatbed scanners, drum scanners, and film scanners using linear arrays tend not to suffer from nonuniformity problems, in part because their illumination source is often accounted for at scan time. Digital cameras, however, can suffer considerable nonuniformity because of lens performance or improper illumination set-up by the user.
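One image-processing aid for exposing nonuniformity is to capture a uniform gray target and compare the center against the corners. The sketch below, under that assumption, reports the center-to-corner falloff as a percentage and shows flat-field correction (dividing an image by the normalized flat capture), a common way to compensate; it is not a description of any particular device's internal method. Function names and values are hypothetical, and the flat capture is assumed to contain no zero counts.

```python
def corner_falloff_percent(flat):
    """Percent drop in counts from the image center to the mean of the corners.

    flat: 2-D list of counts captured from a uniform gray target.
    """
    rows, cols = len(flat), len(flat[0])
    center = flat[rows // 2][cols // 2]
    corners = [flat[0][0], flat[0][-1], flat[-1][0], flat[-1][-1]]
    return 100.0 * (center - sum(corners) / 4.0) / center


def flat_field_correct(image, flat):
    """Divide out the illumination profile recorded in `flat`, rescaled so the
    corrected image keeps the flat's mean level. Assumes no zero counts in flat."""
    flat_mean = sum(sum(row) for row in flat) / (len(flat) * len(flat[0]))
    return [[px * flat_mean / f for px, f in zip(img_row, flat_row)]
            for img_row, flat_row in zip(image, flat)]


# Hypothetical 3 x 3 capture of a uniform target with vignetting.
flat = [[150, 180, 150],
        [180, 200, 180],
        [150, 180, 150]]
print(corner_falloff_percent(flat))  # 25.0
corrected = flat_field_correct(flat, flat)  # correcting the flat itself
spread = max(max(r) for r in corrected) - min(min(r) for r in corrected)
print(spread < 1e-9)  # True: the corrected target is uniform
```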

3.5.2 Dust and scratches

While dust is a function of scanner, document, and environment hygiene, the extent to which scratches in film or on a flatbed platen are hidden is often overlooked as a scanner selection criterion. Scratch suppression in film scanners is dependent on proper illumination design. Scratches are increasingly being suppressed after capture through scratch-detection methods and then digitally corrected with interpolation algorithms.

3.5.3 Streaks

Streaks are localized line nonuniformities in a scanned image. Because of the rectangular grid format of digital images, streaks usually run in horizontal or vertical directions, and they are often more pronounced in scanners using linear-array detectors. Occasionally, repetitive streak patterns, called rastering, can occur across a scanned image.
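A simple way to look for streaks, assuming a capture of a uniform target and a linear-array scanner whose sensor elements map to image columns, is to average each column and flag columns that depart from the median column average. The function name, the `tolerance` threshold, and the data are hypothetical.

```python
from statistics import median


def find_streak_columns(image, tolerance):
    """Return indices of columns whose average count differs from the median
    column average by more than `tolerance` counts."""
    rows, cols = len(image), len(image[0])
    col_means = [sum(image[r][c] for r in range(rows)) / rows for c in range(cols)]
    typical = median(col_means)
    return [c for c, m in enumerate(col_means) if abs(m - typical) > tolerance]


# Hypothetical 3-row capture of a uniform target; column 3 is a bright streak.
image = [[100, 100, 101, 110, 100],
         [100, 99, 100, 111, 100],
         [101, 100, 100, 109, 99]]
print(find_streak_columns(image, tolerance=3.0))  # [3]
```

The median is used as the reference level so that a single strong streak does not pull the baseline toward itself.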

3.5.4 Color misregistration

Color misregistration is the spatial misalignment of color planes. It can occur because of poor lens performance or the optical-mechanical methods used to capture the image. It is best recognized by color fringing at sharp, high-contrast edges and in color scans of halftone images. It is most often a problem with inexpensive linear-array scanners. Several years ago, this artifact was not worth considering because it rarely occurred at a significant level. With the advent of less expensive parts and manufacturing shortcuts, however, color misregistration has become more of a problem and should be monitored.
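Misregistration can be estimated by comparing the same edge as recorded in two color planes. This sketch, assuming one-dimensional profiles taken across a sharp, high-contrast edge, searches integer pixel shifts for the one that minimizes the mean squared difference; practical tools typically refine this to sub-pixel precision. The profiles are hypothetical, and `max_shift` should stay small relative to the profile length so that the compared overlap does not shrink to a flat region.

```python
def best_shift(ref, other, max_shift):
    """Integer shift s (in pixels) for which other[i + s] best matches ref[i],
    judged by the mean squared difference over the overlapping samples."""
    n = len(ref)
    best, best_err = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(ref[i], other[i + s]) for i in range(n) if 0 <= i + s < n]
        err = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if err < best_err:
            best, best_err = s, err
    return best


# Hypothetical profiles across a dark-to-light edge in the green and red planes.
green = [10, 10, 10, 200, 200, 200, 200, 200]
red = [10, 10, 10, 10, 200, 200, 200, 200]
print(best_shift(green, red, max_shift=2))  # 1: the red edge sits 1 pixel later
```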

3.5.5 Aliasing

Aliasing occurs because the sampling rate is insufficient for the spatial frequency content of the object being scanned. It occurs only in digital images. For repetitive features such as halftones or bar patterns it manifests as a moiré pattern. It is also recognized in nonrepetitive features by jagged-edge transitions ('jaggies'). The potential for aliasing can be detected by slanted-edge MTF measurements that are described in Sections 5.3 and 5.5.
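The folding behavior behind aliasing can be shown with a small calculation: any spatial frequency above the Nyquist limit (half the sampling rate) is recorded as a lower frequency. A minimal sketch, assuming an ideal point sampler with no MTF losses; the 300 ppi rate and the test frequencies are illustrative only.

```python
def aliased_frequency(signal_freq, sampling_rate):
    """Apparent frequency after ideal point sampling (both arguments in the
    same units, e.g. cycles/inch and samples/inch).

    Frequencies above the Nyquist limit (sampling_rate / 2) fold back into
    the range 0 .. sampling_rate / 2.
    """
    f = signal_freq % sampling_rate
    return min(f, sampling_rate - f)


# Sampling at 300 ppi, so the Nyquist limit is 150 cycles/inch.
print(aliased_frequency(100, 300))  # 100: below Nyquist, recorded correctly
print(aliased_frequency(200, 300))  # 100: above Nyquist, appears as a coarser false pattern
```

The second case is the moiré mechanism: a fine 200 cycles/inch pattern is indistinguishable in the sampled data from a genuine 100 cycles/inch pattern.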

3.5.6 Contouring/quantization

Contouring is defined as the assignment of a single digital count value to a range of densities that vary by more than one just-noticeable difference in density. It occurs because of insufficient bit depth in a captured image. It is most noticeable in slowly varying portions of an image and manifests itself as an abrupt and unnatural change in density. Contouring is prevented in most digital capture devices with internal bit depths of 10 bits or greater.
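The link between bit depth and contouring can be sketched by quantizing a slowly varying ramp and counting how many distinct code values it receives. This assumes a noise-free, linear ramp expressed as a fraction of full scale; in real images, noise can partly mask contour steps, and whether a step is visible depends on the just-noticeable difference, which is not modeled here. The function name is hypothetical.

```python
def distinct_levels(start, stop, samples, bits):
    """Quantize a linear ramp running from `start` to `stop` (fractions of full
    scale, 0.0-1.0) to `bits` per sample and count the distinct codes used."""
    max_code = (1 << bits) - 1
    codes = set()
    for i in range(samples):
        value = start + (stop - start) * i / (samples - 1)
        codes.add(round(value * max_code))
    return len(codes)


# A gentle gradient covering 2 percent of full scale across 1000 pixels.
print(distinct_levels(0.40, 0.42, 1000, bits=8))   # 6 codes: wide, visible bands
print(distinct_levels(0.40, 0.42, 1000, bits=10))  # 22 codes: much finer steps
```

At 8 bits each band in this example is roughly 170 pixels wide, which is why contouring shows up first in smooth, slowly varying regions.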

4.0 Understanding Product Specifications

After the requirements for a scanner have been defined, it would seem a simple task to review several manufacturers' product specification sheets and choose the scanner that best meets those criteria. This is certainly true in the case of easily verifiable items, where there is no ambiguity about definition (for example, power requirements, physical dimensions, and sensor type). However, for most criteria related to image quality, this is not the case. Because there are few strict or unique standards for digital capture imaging performance criteria such as resolution, dynamic range, noise, or color fidelity, a manufacturer can choose how it markets a device's capabilities. In the absence of means by which to independently verify specification sheet claims, buyers should remember two rules:

1) Approach all imaging performance claims on specification sheets with caution. They are often confusing, inflated, or misleading.

2) Generally speaking, you get what you pay for. It is wise to consider a manufacturer's reputation when selecting a scanner, although there are always exceptions.

4.1 Resolution: DPI or Image/File Size?

Specification sheets can offer resolution for digital capture devices in terms of spatial sampling rate or of image or finished file size.

4.1.1 Spatial Sampling Rate

Where document imaging is the presumed application, resolution is expressed as the spatial sampling rate, which may be defined as the spatial frequency of pixels on the document. This is the case for flatbed document scanners, drum scanners, copy stand cameras, and microfilm scanners. The rate is cited as dpi, ppi (pixels per inch), or, infrequently, spi (samples per inch). The sampling rate is a necessary, but not sufficient, condition for actual detail capture in sampled imaging systems. Sufficiency comes from knowing the extent to which light spreads in the capture device, as described by the LSF or MTF. Because specification sheets cite only the sampling rate, their resolution figures do not enable the user to draw any meaningful conclusions regarding resolution performance. The common terms for sampling rate in specification sheets are optical resolution and addressable resolution.
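Because the sampling rate sets only an upper bound, it can be useful to convert a quoted ppi value into its Nyquist limit and pixel pitch, the figures against which MTF results are usually compared. A minimal sketch; the function names are hypothetical.

```python
MM_PER_INCH = 25.4


def nyquist_limit(ppi):
    """Highest spatial frequency representable at a given sampling rate,
    returned as (cycles per inch, cycles per mm). An upper bound only: the
    MTF decides how much contrast actually survives at that frequency."""
    cycles_per_inch = ppi / 2.0
    return cycles_per_inch, cycles_per_inch / MM_PER_INCH


def pixel_pitch_mm(ppi):
    """Distance between sample centers on the document, in millimeters."""
    return MM_PER_INCH / ppi


print(nyquist_limit(600))   # (300.0, about 11.81)
print(pixel_pitch_mm(600))  # about 0.0423 mm
```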

Occasionally, document scanner resolution is specified differently in the two different directions of the scan; for instance, 600 x 1200 dpi. Although both values are considered optical resolutions, the higher one is usually achieved through a higher sampling rate that outpaces the MTF performance. The lower of these two values, associated with the sensor pixel pitch, is probably a better indicator of true detail capture abilities. Most resolution claims greater than 600 dpi should be viewed with suspicion.

One way of inflating resolution claims is the use of interpolated resolution. Interpolation is a powerful and appropriate tool for many image-processing needs (e.g., isolated defect concealment or benign image scaling); however, using it as a 'pixel-filling' utility to inflate resolution claims is misleading at best, because practical interpolation methods are imperfect predictors of missing pixel values. Resolutions of 1800-9600 dpi, sometimes touted by manufacturers, are possible only with the most expensive laboratory equipment or with customized devices such as drum scanners or microdensitometers.
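A toy one-dimensional example, assuming ideal point sampling and simple linear interpolation, shows why interpolated resolution adds pixels but not detail: a one-pixel-wide line that falls between the coarse samples never reaches the data, and no interpolation of those samples can bring it back. The function names are hypothetical.

```python
def sample_every_other(signal):
    """Ideal point sampling at half the original rate."""
    return signal[::2]


def linear_upsample_2x(samples):
    """Insert the average of each neighboring pair between them."""
    out = []
    for a, b in zip(samples, samples[1:]):
        out.extend([float(a), (a + b) / 2.0])
    out.append(float(samples[-1]))
    return out


# A one-pixel-wide line (value 1) on a blank background, fine-pitch scene.
scene = [0, 0, 0, 1, 0, 0, 0, 0, 0]
coarse = sample_every_other(scene)   # samples indices 0, 2, 4, 6, 8: line missed
restored = linear_upsample_2x(coarse)
print(len(restored), max(restored))  # 9 0.0: same pixel count, line not recovered
```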

Prudent and successful interpolation methods are found in color filter array (CFA) digital cameras. Unlike the interpolation technique cited above, which fills in pixels where none existed before, CFA interpolation schemes rely on correlated knowledge of the color that actually was sampled at that location. Because resolution between color channels often correlates well, these methods have been shown to be almost lossless for moderate image interpolation.
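One well-known scheme of this kind is constant color-difference interpolation: rather than interpolating a missing red value from red neighbors alone, it interpolates the difference R - G and adds back the locally known green. The one-dimensional sketch below assumes the green plane has already been fully reconstructed; it illustrates the principle and is not claimed to be the algorithm any particular camera uses. Names and values are hypothetical.

```python
def interpolate_red(red_samples, green_full):
    """Estimate red at odd positions of a row by interpolating the color
    difference (R - G) from neighboring red sites and adding back green.

    red_samples: red values at even indices, None at odd indices.
    green_full:  green values at every index (assumed already reconstructed).
    """
    n = len(green_full)
    red = list(red_samples)
    for i in range(1, n, 2):
        diffs = [red_samples[j] - green_full[j] for j in (i - 1, i + 1) if j < n]
        red[i] = green_full[i] + sum(diffs) / len(diffs)
    return red


# Hypothetical row in which red tracks green closely (R = G + 5 everywhere).
green = [10, 60, 70, 20, 130]
red_known = [15, None, 75, None, 135]
print(interpolate_red(red_known, green))  # [15, 65.0, 75, 25.0, 135]
# Averaging red neighbors alone would give 45.0 and 105.0 at the missing sites.
```

When the channels are well correlated, as in this constructed row, the color-difference estimate recovers detail that plain interpolation within one channel would smear away.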

4.1.2 File/Image Size

For digital cameras having no document reference, resolution is specified in terms of finished file size (e.g., 18 MB), intermediate file size (e.g., 6 MB), or image sensor size (e.g., 2048 lines x 3072 pixels). Relating one to another requires knowledge of the number of bits per pixel per color and the total number of colors, as well as some familiarity with the sensor technologies used. This method of resolution specification can be confusing to interpret. The calculation for a finished file is as follows:

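$$\text{finished file size (bytes)} = (\#\,\text{lines} \times \#\,\text{pixels}) \times (\text{bits per pixel per color}) \times (\#\,\text{colors}) \times \frac{1\ \text{byte}}{8\ \text{bits}}$$

$$(2048 \times 3072) \times 8 \times 3 \times \frac{1}{8} = 18{,}874{,}368\ \text{bytes} \approx 18\ \text{MB}$$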

File size determination is an imperfect discipline, largely because of the loose definition that the imaging community applies to the term megabyte. Technically, a megabyte is one million bytes. The imaging community, however, has taken the nearest integer power of two and used this as the basis of calculation. Under this system, a megabyte is $2^{20}$, or 1,048,576 bytes. Using this number as the divisor in the above equation yields exactly 18 MB.

Occasionally, cameras with CFA color sensors capture a small intermediate file that is later processed into a larger finished file on a computer. The smaller intermediate file size is often quoted because of its storage advantage. In the example above, the intermediate file effectively contains only one color channel (each sensor pixel records a single color), so its size is only 6 MB.
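The finished and intermediate file-size arithmetic can be checked with a few lines of code. A minimal sketch, assuming uncompressed image data with no file header and bits packed end to end; it reports both decimal megabytes and the $2^{20}$-byte megabytes used by the imaging community. The function name is hypothetical.

```python
def finished_file_bytes(lines, pixels, bits_per_channel, channels):
    """Uncompressed image-data size in bytes, bits packed end to end."""
    return lines * pixels * bits_per_channel * channels // 8


MIB = 2 ** 20  # the imaging community's "megabyte": 1,048,576 bytes

finished = finished_file_bytes(2048, 3072, bits_per_channel=8, channels=3)
intermediate = finished_file_bytes(2048, 3072, bits_per_channel=8, channels=1)
print(finished)                # 18874368
print(finished / 1_000_000)    # 18.874368 (decimal megabytes)
print(finished / MIB)          # 18.0
print(intermediate / MIB)      # 6.0 (one effective color channel in a CFA file)
```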

Sometimes, very large file sizes are specified that are not consistent with the calculation in the equation just presented. This often occurs when 12-bit/pixel files are created. Because file storage is standardized on 8-bit (i.e., 1-byte) units, a second byte is required to store the 4 bits that do not fit in the first, leaving 4 'empty' bits in that second byte. Although there are ways to 'pack' these bits efficiently, it is sometimes more convenient not to do so. Therefore, the extra 4 bits/pixel tag along. They carry no useful image information, but they do inflate the finished file size.
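Extending the same arithmetic, this sketch compares the storage needed when each 12-bit sample is padded out to two whole bytes against tightly packed storage. The function name and the `packed` flag are hypothetical; real file formats differ in how, and whether, they pack samples.

```python
def stored_bytes(lines, pixels, bits_per_channel, channels, packed=False):
    """Bytes needed to store the image data.

    packed=False: each sample is padded out to whole bytes (12 bits -> 2 bytes).
    packed=True:  samples are packed end to end with no empty bits.
    """
    samples = lines * pixels * channels
    if packed:
        return (samples * bits_per_channel + 7) // 8  # round up to whole bytes
    bytes_per_sample = (bits_per_channel + 7) // 8
    return samples * bytes_per_sample


MIB = 2 ** 20
print(stored_bytes(2048, 3072, 12, 3) / MIB)               # 36.0 (padded)
print(stored_bytes(2048, 3072, 12, 3, packed=True) / MIB)  # 27.0 (packed)
```

For the 2048 x 3072 three-color example, the 9 MB difference between the two results consists entirely of the 'empty' padding bits.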

As a final note to this section, many digital cameras with resolutions lower than 1 Mpixel cite resolution in terms of equivalent monitor resolution. Common examples are as follows:

| Term used | # pixels x # lines |
|---|---|
| VGA | 640 x 480 |
| SVGA | 800 x 600 |
| XGA | 1024 x 768 |
| SXGA | 1280 x 1024 |

4.2 Bit Depth: Gray Levels, Shades of Gray, Millions of Colors

Specification sheets commonly refer to several different bit depths or to the associated number of gray levels or colors. This can be confusing. The source of this confusion often lies in whether the manufacturer is citing

- the number of bits for one color channel,

- the number of bits for all color channels, or

- the number of internal bits versus the number of finished-file bits.

The number of gray levels a single channel can represent depends on its bit depth, N:

$$\#\,\text{gray levels} = 2^{N}$$

For example, an 8-bit-per-color-channel device would potentially yield a maximum of

$$256\ \text{gray levels} = 2^{8}$$

The relation between the number of potential colors, the number of bits per channel (N), and the number of color channels (C) is

$$\#\,\text{potential colors} = 2^{C \times N}$$

For example, 8 bits/channel x 3 color channels gives $2^{3 \times 8} = 16{,}777{,}216$, or more than 16 million colors.

For artifact-control purposes, almost all digital capture device manufacturers capture the initial raw data with more internal bits than will be reported to the user in the finished file. This is common engineering practice. As an example, internal captures (that is, A/D conversion) and processing at 10 bits/pixel/color channel are common. It is not until the end of the internal processing chain that the data are converted to 8 bits/pixel/color channel. For a three-color scanner, this means that 30 bits/pixel (10 bits/pixel x 3 color channels) are maintained initially and finally reported as 24 bits/pixel (8 bits/pixel x 3 colors).
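The bit-depth relations above reduce to powers of two. This minimal sketch evaluates them and, as an assumption for illustration only, reduces a 10-bit code to 8 bits by dropping the two least significant bits; the text does not say how a given device performs that final conversion, and real devices typically apply tone processing first. Function names are hypothetical.

```python
def gray_levels(bits_per_channel):
    """Code values available to one channel: 2**N."""
    return 2 ** bits_per_channel


def potential_colors(bits_per_channel, channels):
    """Distinct color codes across all channels: 2**(C x N)."""
    return 2 ** (channels * bits_per_channel)


def to_8_bit(code_10_bit):
    """Illustrative 10-bit (0-1023) to 8-bit (0-255) reduction that simply
    drops the two least significant bits."""
    return code_10_bit >> 2


print(gray_levels(8))           # 256
print(potential_colors(8, 3))   # 16777216, "more than 16 million colors"
print(potential_colors(10, 3))  # 1073741824, the 30-bit internal case
print(to_8_bit(1023))           # 255
```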

Increasingly, manufacturers are citing internal bits as a means of distinguishing their products without revealing that the bits are inaccessible. This means, for instance, that billions of potential colors (e.g., $2^{30}$, about 1.07 billion) are claimed for some scanners even though users cannot realize them. The same practice applies even to binary scanners (1 bit/pixel): the initial internal capture is done at 8 bits/pixel, and this extra bit depth is then used to make intelligent thresholding decisions for optimal binary image quality. This has always been the practice, but only recently has it been cited in specification literature.

4.2.1 Bit Depth Effects on Speed

Accessing more bits brings not only the obvious trade-off of increased storage requirements but also the less obvious trade-off of longer scan times. Some manufacturers that allow access to imagery at various bit depths cite the fast scan times associated with the lowest bit depth. Access to higher bit-depth imagery will require longer scan times and will lower productivity.

Bit-depth specifications do not necessarily provide information about the quality of the signal being digitized. Are the bits being used to digitize image data or noise? In all scanners, portions of the bit capacity are used to correct for nonuniformities in the detector. Scanners using inexpensive parts often require a larger portion of the total bit depth for detector compensation. The bits used for this compensation are not usable for image data, but the user has no way to know this.
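A hypothetical model makes the point concrete: if a detector element reaches only 80 percent of full scale and is corrected by a per-pixel gain of 1/0.8, the corrected output still spans the full range, but some output codes can never occur. The sketch below counts the codes that remain reachable; the function name is hypothetical, it ignores offset (dark-signal) correction and noise, and the 80 percent figure is illustrative only.

```python
import math


def usable_levels_after_gain(full_scale_codes, relative_response):
    """Count the distinct output codes that can occur after per-pixel gain
    correction of a detector element that reaches only `relative_response`
    (e.g. 0.8) of full scale."""
    max_raw = int(relative_response * (full_scale_codes - 1))
    gain = 1.0 / relative_response
    outputs = {min(full_scale_codes - 1, round(raw * gain))
               for raw in range(max_raw + 1)}
    return len(outputs)


levels = usable_levels_after_gain(1024, 0.8)  # 10-bit channel, 80% response
print(levels)             # 819 of 1024 codes reachable
print(math.log2(levels))  # about 9.68 effective bits
```

Under these assumptions, roughly a third of a bit of the nominal 10-bit depth is spent on compensation, and nothing on the specification sheet would reveal it.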

4.3 Dynamic Range: Maximum Density, # f-stops